Fleek Network Testnet Phase {3}: Performance Results

Fleek Network Testnet Phase {3}, which concluded on February 14th, was a key event in showcasing the network's edge computing features and establishing a foundation for future services and use cases. Phase {3} offered developers the first real opportunity to experiment with Fleek Network’s capabilities and get a feel for its performance in a more hands-on environment, through the deployment of edge-optimized JavaScript Functions.

The community deployed 552 functions through the code playground, with a total of 9,342 calls made during the two-week testnet. In parallel with the public activity, the core team deployed functions and ran substantial internal tests to collect the data needed for this early performance test. The results collected during Phase {3} show substantial improvement over previous testnets and indicate that edge functions deployed on Fleek Network can be significantly more performant than traditional cloud platforms like AWS Lambdas and Vercel Serverless.

Testnet Phase {3}: Results

Phase {3} Performance Results TLDR:

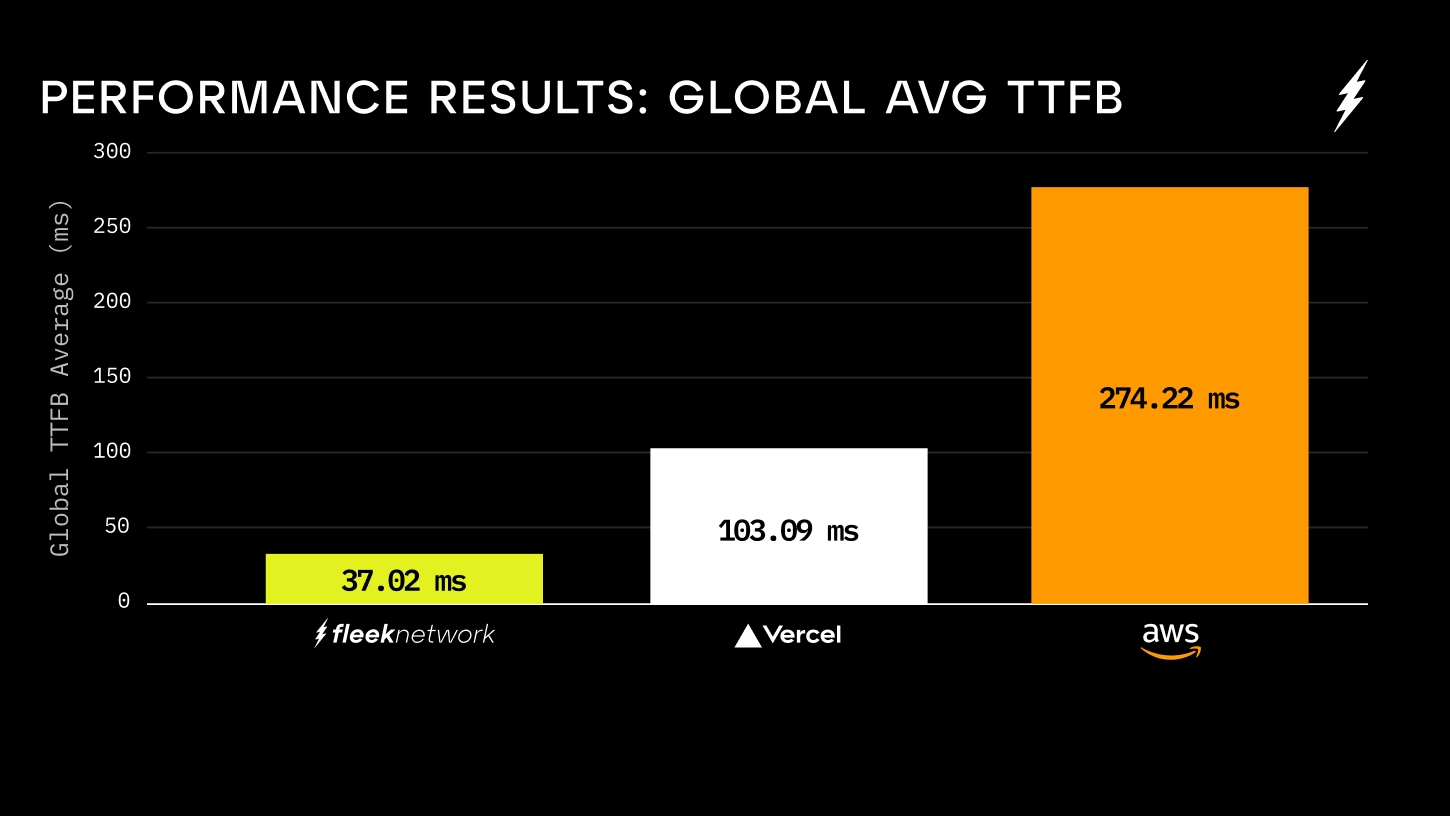

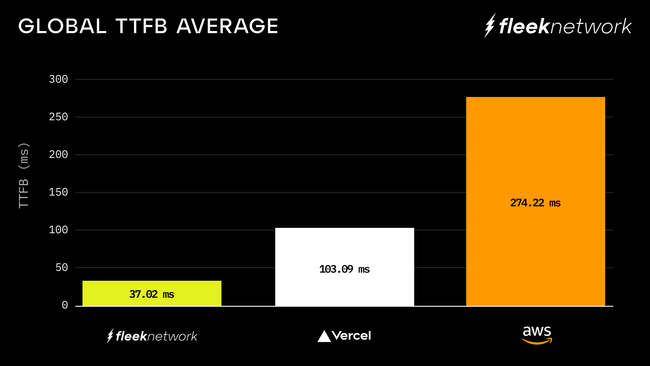

- Fleek Network deployed edge functions saw an average global TTFB of 37.02ms including the TLS handshake, 7x faster than AWS Lambdas (274.22ms), and 2.7x faster than Vercel Serverless (103.09ms) in global testing.

- If SA-East is excluded, where no nodes were running during this testnet, Fleek Network average global TTFB was 24.95ms.

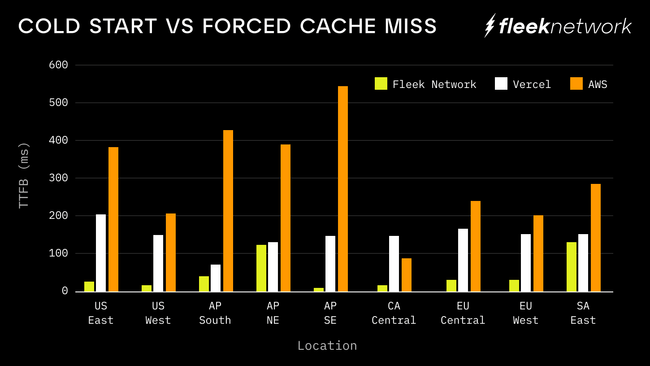

- In forced cache-miss simulations, Fleek Network deployed edge functions saw an average global TTFB of 50.40ms including the TLS handshake, 6x faster than AWS Lambdas cold start (309.53ms), and 3x faster than Vercel Serverless cold start (154.30ms).

- The testing environment used to collect these results is available through this open-source repository for anyone who wants to verify the findings.

- This was a basic test to prove concept and show progress as we develop the protocol. There are other ways to benchmark edge compute performance beyond TTFB.
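As a quick arithmetic check, the speedup multiples quoted in the bullets above can be recomputed from the reported averages. The sketch below only restates the published figures; the object and function names are illustrative and are not taken from the testing repository.

```javascript
// Average global TTFB values (ms) as reported in the bullets above.
const reported = {
  globalTest: { fleek: 37.02, awsLambda: 274.22, vercel: 103.09 },
  cacheMiss: { fleek: 50.40, awsLambda: 309.53, vercel: 154.30 },
};

// Speedup = competitor TTFB / baseline TTFB, rounded to one decimal place.
function speedup(baselineMs, otherMs) {
  return Math.round((otherMs / baselineMs) * 10) / 10;
}

for (const [scenario, ttfb] of Object.entries(reported)) {
  console.log(scenario, {
    vsAwsLambda: speedup(ttfb.fleek, ttfb.awsLambda),
    vsVercel: speedup(ttfb.fleek, ttfb.vercel),
  });
}
```

The ratios come out to roughly 7.4x and 2.8x for the global test and 6.1x and 3.1x for the cache-miss test, in line with the rounded multiples quoted above.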

Read on for more info on the testing methodology and performance results from Phase {3}:

Testnet Phase {3}: Methodology

The goal of this phase was to demonstrate real-world edge computing use cases running performantly on Fleek Network. In order to successfully demonstrate that, Fleek Network deployed functions would need to be benchmarked against leading Web2 alternatives. For this test, AWS Lambda and Vercel Serverless were used for the benchmarking.

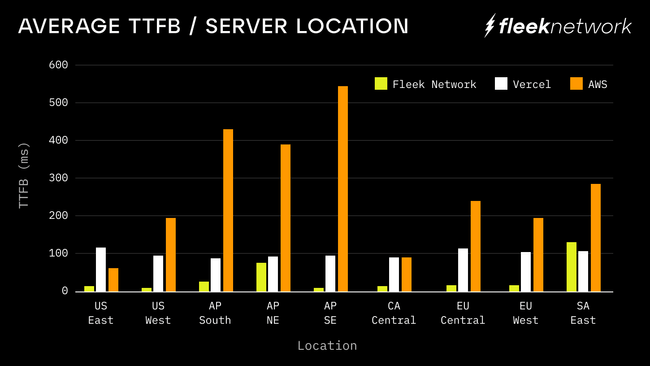

The test was conducted by deploying the same JavaScript function to each provider tested: Fleek Network Edge Functions, AWS Lambdas, and Vercel Serverless. Ten client servers, located in different AWS regions around the globe, invoked this function and reported Time to First Byte (TTFB) in milliseconds:

    const main = () => {
      // The number we return was randomly generated and
      // hard coded into the function when deployed
      return number
    }

The reason for the random hard-coded number is so the function would have a unique CID hash. This ensured that the function would not be cached in the Fleek Network nodes from a previous test.
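To illustrate why a different hard-coded number yields a different content identifier, here is a minimal sketch. It assumes a 32-bit FNV-1a hash as a stand-in for the real CID computation, which works differently; `buildFunctionSource` and `freshDeployment` are hypothetical helpers, not part of the testing repository.

```javascript
// Stand-in for content addressing: a 32-bit FNV-1a hash of the source text.
// A real CID is derived differently; this only shows that changing the
// content changes the identifier. Assumes ASCII source text.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return (hash >>> 0).toString(16).padStart(8, "0");
}

// Hypothetical helper: builds the benchmark function's source
// with the given number baked in.
function buildFunctionSource(number) {
  return `const main = () => {\n  return ${number}\n}`;
}

// Hypothetical helper: generates a new random number per deployment
// and derives an identifier from the resulting source.
function freshDeployment() {
  const number = Math.floor(Math.random() * Number.MAX_SAFE_INTEGER);
  const source = buildFunctionSource(number);
  return { number, source, id: fnv1a(source) };
}

const first = freshDeployment();
const second = freshDeployment();
console.log(first.id, second.id);
```

Because the identifier is derived from the content, a node that cached an earlier deployment cannot serve the new one from cache under the same identifier.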

The function was invoked thousands of times across each provider from each of the 10 different testing regions. To give a summary of the results, this report shows the global average TTFB for each service provider as well as the regional average TTFB across the 10 different testing locations, beginning from a cold start for every location. In all simulations, time for the TLS handshake was also accounted for.
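A client-side TTFB sample could be taken roughly as follows. This is a sketch under assumptions, not the method used in the open-source testing environment: it relies on the standard `fetch` and `performance.now()` globals (available in browsers and recent Node.js versions), and it treats the moment response headers arrive as the first byte. `measureTtfbMs` is an illustrative name.

```javascript
// Times a single request from just before it is sent until the response
// headers arrive. `fetchImpl` and `now` are injectable so the sketch can
// be exercised without network access.
async function measureTtfbMs(url, fetchImpl = fetch, now = () => performance.now()) {
  const start = now();
  const response = await fetchImpl(url);
  const elapsedMs = now() - start;
  // Drain the body so the connection is released cleanly.
  await response.arrayBuffer();
  return elapsedMs;
}
```

Note that a reused keep-alive connection would skip the TLS handshake, so each sample would need a fresh connection for the handshake time to be included as described above.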

Client servers were located in the following regions: US-EAST (Northern Virginia), US-WEST (Northern California), AP-SOUTH (Mumbai), AP-NORTHEAST (Tokyo), AP-SOUTHEAST (Singapore), CA-CENTRAL (Montreal), EU-CENTRAL (Frankfurt), EU-WEST (Ireland), SA-EAST (São Paulo).
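The regional and global averages described above could be computed along these lines. This sketch assumes the global figure is the mean of the regional averages, which the report does not state explicitly. The sample values are made up for illustration and are not measurements from the test.

```javascript
// Mean of an array of numbers.
function mean(values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// samplesByRegion: { "US-EAST": [ms, ms, ...], ... }
// Returns per-region averages plus a global average (assumed here to be
// the mean of the regional averages), optionally skipping some regions.
function summarize(samplesByRegion, excludedRegions = []) {
  const regional = {};
  for (const [region, samples] of Object.entries(samplesByRegion)) {
    if (excludedRegions.includes(region) || samples.length === 0) continue;
    regional[region] = mean(samples);
  }
  return { regional, global: mean(Object.values(regional)) };
}

// Made-up sample values for illustration only.
const example = {
  "US-EAST": [20, 30],
  "EU-WEST": [25, 35],
  "SA-EAST": [90, 110],
};
console.log(summarize(example));
console.log(summarize(example, ["SA-EAST"]));
```

Passing a region in `excludedRegions` mirrors the way the summary reports a second global average with SA-East left out.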

The testing methodology also features a separate evaluation of cache miss simulations, which would be a closer comparison to AWS or Vercel’s cold start. This test assesses the network's performance when requested data is not already stored in the cache. To simulate the network’s ability to fetch the file from its origin, IPFS in this case, a series of tests were run where the file was guaranteed not to be previously cached in the network.

Helpful Context:

When interpreting these results, keep in mind that AWS Lambdas must be deployed to a specific region and have no concept of geolocation or smart routing: every request, from anywhere in the world, goes to the single region where the Lambda is deployed, New York City in this case. If the results had been collected only from US-EAST, the region closest to the Lambda, it would have had a regional average TTFB of 64ms. Achieving better speeds globally with Lambdas would require adding geo-routing through a separate solution like Amazon CloudFront.

Since Vercel Serverless is essentially ‘Lambda + CloudFront’, testing with Lambdas and Vercel Serverless was sufficient for these early benchmarking purposes. Vercel Serverless served as the geo-routing-enabled alternative to AWS Lambdas: requests in the Vercel part of the test were sent to the closest server rather than to a single preconfigured region, as they are with AWS Lambdas. This provides a much closer apples-to-apples comparison between Fleek Network and an alternative with smart routing and geolocation enabled, as opposed to AWS Lambdas, which are not geo-optimized.

How To Reproduce Results:

The Foundation and core development team acknowledge that metrics and methodology can always be skewed to present a protocol in the best possible light. To make Phase {3}’s results as transparent as possible, the testing environment used to collect metrics has been open-sourced and is available here. Follow the guide in the README to run the test and verify the results independently.

Anyone with the capabilities to replicate the testing methodology is encouraged to do so and share their results with the Foundation and community.

What’s Next: Phase {4} Preview

Looking ahead, the core developers are beginning to build on these results and prepare for Phase {4}, the first long-standing, stable testnet phase. Phase {4} is currently planned to begin in late April, although the exact start date will be determined by developer timelines. A key part of Phase {4} will be the ability for developers to create and deploy their own infrastructure, services, and apps on (or leveraging) Fleek Network. More details will be provided in the coming weeks and throughout the leadup to the start of Phase {4}.

In terms of node operation, at a certain point during Phase {4} a select number of node operators will be admitted as part of a genesis group, to ensure the network’s performance, quality, and reliability during this final testnet phase. This is an important step considering the recent change in node requirements. Expect more details in March on the next steps for those interested in participating as node operators.

That’s all for Fleek Network Testnet Phase {3}. The core team is extremely excited about this early performance showcase, and can’t wait to be back for Phase {4} with new protocol functionality, services, use cases, and performance improvements.

The Foundation would like to thank everyone who tried the Edge Function Playground and contributed to the success of Phase {3} while it was live!

More Phase {4} details will be provided in the coming weeks for both node operators and developers interested in building on or using Fleek Network.

Follow along on X for the latest on Fleek Network’s rollout ⚡

-Fleek Foundation